The Swiss Cheese Model: How Infosec Must Learn From Pandemic Response

Content warning: Contains brief and unspecific discussion of multiple kinds of abuse (partner, parental, employer)

As a cybersecurity professional and a person who continues to take the ongoing pandemic very seriously, I believe strongly in using defence in depth tactics — also known as "the Swiss Cheese Model" — in the interests of our fight against threats both digital and viral. Every security professional, every tech worker and indeed everyone should take up this tool, both in their technical labour and so-called "real world" lives.

But the industry (and here I am talking about cybersecurity in particular, though this is not unique to my domain by any means) rejects this tooling, whether the goal is keeping our users safe or keeping our own colleagues, friends, and families safe. That rejection has given rise to an attitude of indifference to threats that do not have fancy monikers, or threats that are merely biological.

This manifests itself in various ways.

Major security conferences have begun allowing the entities and people who are our users' greatest threats to attend as honoured guests, presenters, and even keynote speakers. These same conferences have aggressively done away with pandemic mitigations: a DEF CON or two ago saw badges created for people who acquired a COVID-19 infection at the conference.

By allowing representatives of the United States government, or "former" Unit 8200 employees, to speak at important cybersecurity community events, we signal to the people we are meant to be keeping safe that we are more interested in addressing theoretical threats, or even prosecuting the "New Cold War", than we are in facilitating the digital and physical safety of those who use our products.

This is true regardless of the user-base of these products. Ultimately, even buried deeply in the bowels of a megacorp, we are tasked with protecting not just the profit generation machine, but the wellbeing and safety of our coworkers. When we combine this with making light of the ongoing pandemic, let alone ignoring it altogether, we indicate that our commitment to security stops once our paycheque or fame or particular social mode of interacting is on the line.

This World of Ours

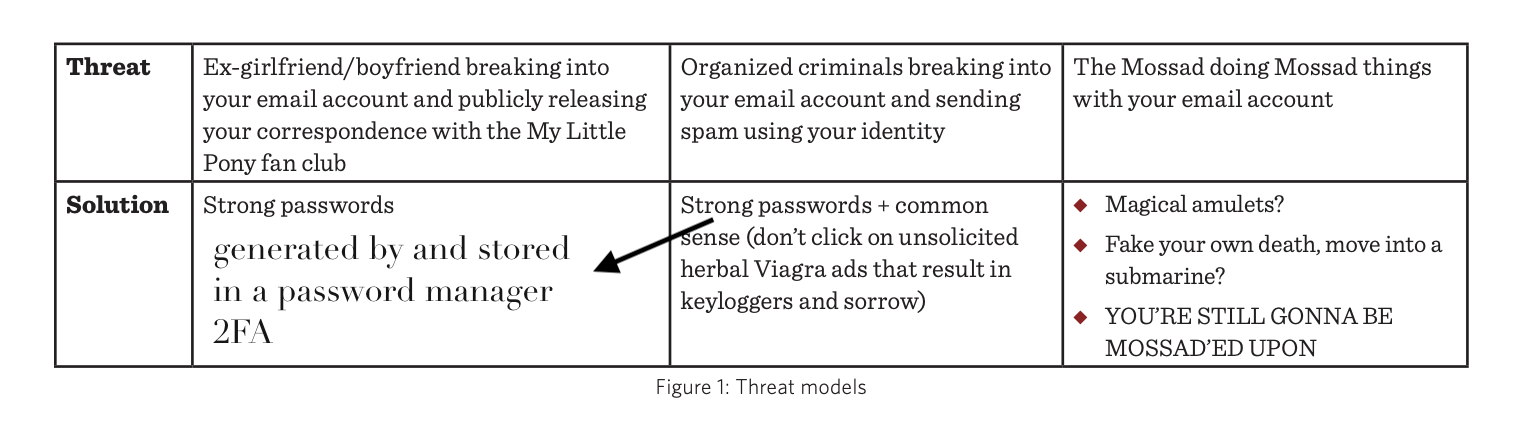

When I deliver security training at work, I often reference the inimitable James Mickens' essay "This World of Ours" (PDF). I share his diagram, slightly modified for our world of password managers and widely-deployed two factor authentication (2FA), which you can see below.

I try to impress upon my colleagues that basically every single day, we will see our users encountering columns 1 and 2. At my job I am the lone security engineer, and I am talking with an audience that may have little to no security expertise, no matter if they're in a technical domain or not. I have to explain in my hour-long session that the reason we do things that might seem onerous (more on this in a bit) is that we are creating multiple layers of defence – that we have systems and are putting policies and procedures in place to not only keep one another safe, to keep our business and our product safe, but also to keep our users as safe as we can.

I also try to impress upon them that security is a “force multiplier”, not a blocker: that by taking action now, by making digital security a habit, we can make it harder for disaster to strike later. And by doing that, we make the third column even more unlikely, because we either don’t have information that government entities want or we limit the ways in which our own product can be used for nefarious purposes.

Swiss Cheese

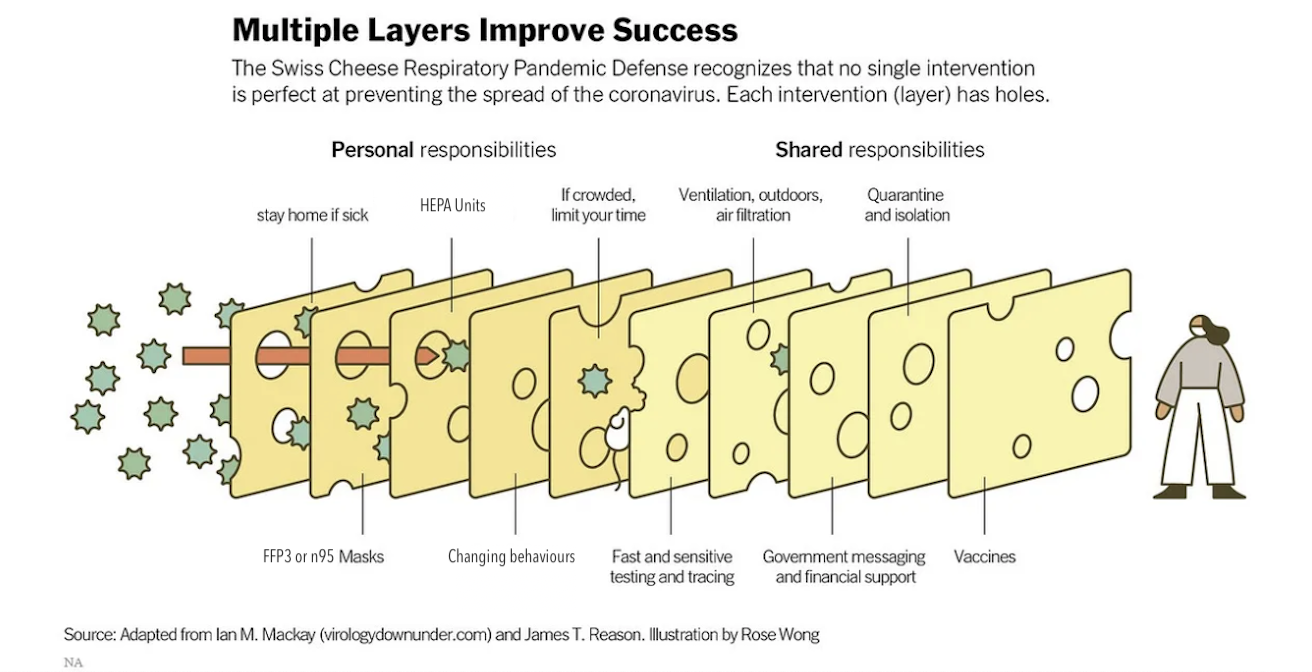

The same holds true for pandemic response. You may or may not be familiar with this image, which I have adapted slightly for 2025:

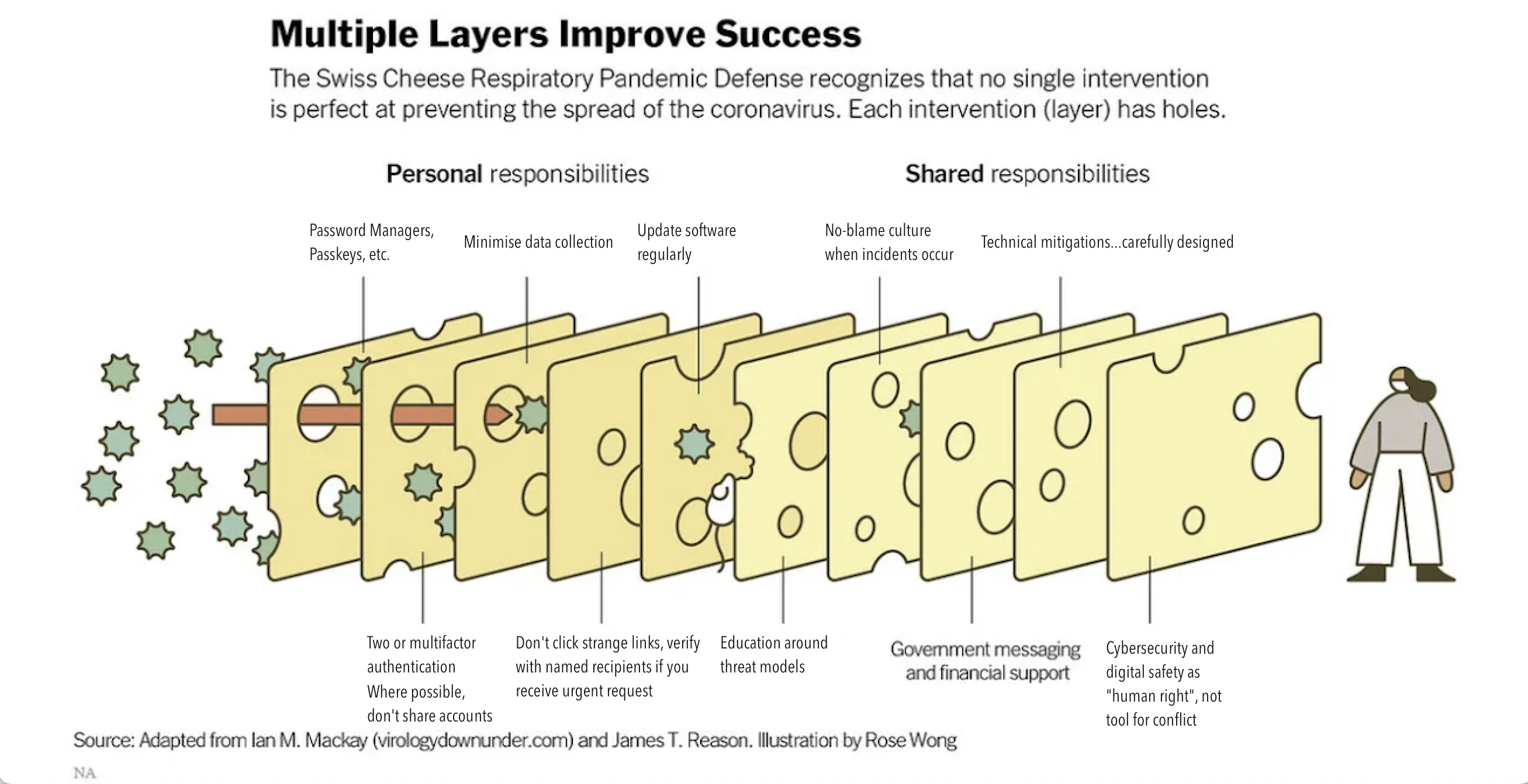

We can adapt this for digital security as well:

(Note that in both cases these are non-exhaustive lists.)

As you can see, in both versions of the diagram we rely on a combination of technical tooling and education/policy to protect one another. This flies in the face of our current situation, in which technical tooling and education/policy are used to protect profits at the expense of our being able to live truly self-actualised lives. We are told that masks are scary, that security is “too hard”, that companies or government entities that use pandemic-driven eugenics or New Cold War-driven weakening/distorting of digital security processes have our best interests at heart. As people who maintain and operate the technical means of production, we have to decide if we are willing to let that stand.
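The layering idea behind both diagrams can be made concrete with a little arithmetic. The sketch below is only an illustration: the failure rates are invented, the layer names are examples, and it assumes the layers fail independently of one another, which real mitigations rarely do perfectly.

```python
from math import prod


def breach_probability(layer_failure_rates):
    """Chance that a threat slips through every layer.

    Assumes each layer fails independently, so the chances multiply.
    """
    rates = list(layer_failure_rates)
    for rate in rates:
        if not 0.0 <= rate <= 1.0:
            raise ValueError("each failure rate must be between 0 and 1")
    return prod(rates)


# Hypothetical, made-up numbers: every slice of cheese has big holes.
layers = {
    "password manager": 0.3,
    "two factor authentication": 0.2,
    "security training": 0.5,
    "open, zero-blame reporting culture": 0.5,
}

best_single_layer = min(layers.values())
all_layers = breach_probability(layers.values())

print(f"best single layer lets through {best_single_layer:.0%} of attempts")
print(f"all layers together let through {all_layers:.1%} of attempts")
```

No single layer here is anywhere near perfect, yet stacking them cuts the share of attempts that get through far below what the best layer manages alone. The same reasoning applies to masks, ventilation, and vaccination stacked together.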

The Current State of Things

The current state of things is a manifestation of opportunism and conflicts fundamentally with our stated goals as security professionals. When I go in search of an external pentest company to evaluate the security of the application I work on, I have to spend hours in meetings about novel phishing test solutions before people will talk with me about application security. When I go in search of tooling to improve security posture, even open source products, I am beset on all sides by security vendors who proudly brag about hiring or receiving funding from intelligence agencies. Security is seen as a way to extract more money from clients, whether through the "SSO tax" or extra fees for fraud-prevention or the so-called “shared responsibility model”, where anything that goes wrong is your fault, and maybe you should have spent the money for the security features we’re so proud to provide. All of this is corrosive, and it objectively has the effect of helping the people who want to harm us and our users.

When we make security onerous, when we focus on mitigations for theoretical attacks that can only (for the moment) be executed by state-level actors, who have an unlimited toolset and have no need to take advantage of an incorrectly configured checksum protocol in a pacemaker’s chipset to harm us, we help an abusive partner who can use a lack of 2FA to spy on an account, or a parent who wishes to spy on their child’s communications, or an employer who uses their security tooling to prevent unionisation. That is why those state-level actors welcome, and indeed often mandate, certain kinds of security tooling, or provide ample funding to so-called “freedom” tools like Tor, Signal, and so on. Such being the nature of things as it is today, we must build something new and different and better, and I believe the Swiss Cheese Model can show us the way.

Principles for a New Security Industry

To that end, I would like to propose a series of principles for a new security industry, with the idea being that they can be applied equally to security and to mitigation of the ongoing (and future) pandemic(s). I will use “users” as a broad term to mean ourselves, those around us, our coworkers, the people who actually use our stuff, our families, our friends, and so on. In Wikipedia parlance this is “users, broadly construed.” The principles are numbered to make them easy to refer to later, not to indicate any particular priority. I believe all of them to be crucial.

1. We owe it to our users to criticise, thoroughly, our own industries. To do otherwise is to harm both our users and the industries in which we exist.

2. Where possible, this should be done publicly and productively. Security or safety by obscurity is ineffective and counterproductive.

3. We must consider threat models beyond our own.

4. We must do away with the notion that collaboration is less desirable than individual, “you do you” styles of operating. The only way we build a safer (digital/physical) world is together.

5. We must prioritise actually doing the things that keep us safe.

6. We must react strongly and truthfully to incorrect notions.

7. There is a prevailing attitude in some circles that people who “know better”, be it about security or pandemic response, are somehow superior. We must actively reject this wherever we see it, and remember that we have an obligation to teach.

8. As best we can, we must act to prevent harm.

This is a dangerous statement in the wrong hands, because “harm” is a nebulous and easily misused term. What I mean here is when we see someone acting in a way that threatens our users, we must be compelled to do what we can to mitigate it. An example is necessary: some security teams will conduct “phishing tests” on their coworkers as a way of analysing their own performance. This is short-sighted and counterproductive, because it trains other people to not trust the security team, and causes distress/fear for our users. The Swiss Cheese Model advises a different way of preventing harm from phishing: education, technical mitigations, open/zero-blame culture, and so on.

9. We have to decide to actively pursue a safer world and make plans to put that into action.

10. No matter how influential we are, we must remember that the work comes first. Your CVE list is useless on an empty planet.

11. We must be aware of our own mistakes and seek to correct them.

The best time to start wearing a mask is now. The best time to roll out TLS fleet-wide is now. The best time to add DMARC to all of your domains is now. The best… you get the picture.
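As one small illustration of "now", here is a minimal sketch for checking whether a DMARC policy actually enforces anything. It assumes you have already fetched the TXT record published at `_dmarc.<your domain>` by some other means. The helper names `parse_dmarc` and `dmarc_is_enforcing` and the `example.com` addresses are hypothetical, and the check is deliberately simplified rather than a full validator.

```python
def parse_dmarc(record: str) -> dict:
    """Split a DMARC TXT record into its tag=value pairs."""
    tags = {}
    for part in record.split(";"):
        part = part.strip()
        if not part:
            continue
        key, _, value = part.partition("=")
        tags[key.strip().lower()] = value.strip()
    return tags


def dmarc_is_enforcing(record: str) -> bool:
    """True when the record asks receivers to quarantine or reject failures.

    A policy of p=none only collects reports; it does not protect anyone yet.
    """
    tags = parse_dmarc(record)
    is_dmarc = tags.get("v") == "DMARC1"
    policy = tags.get("p", "").lower()
    return is_dmarc and policy in {"quarantine", "reject"}


# Illustrative records for a placeholder domain.
monitoring_only = "v=DMARC1; p=none; rua=mailto:dmarc-reports@example.com"
enforcing = "v=DMARC1; p=reject; rua=mailto:dmarc-reports@example.com"

print(dmarc_is_enforcing(monitoring_only))
print(dmarc_is_enforcing(enforcing))
```

Starting at `p=none` to gather reports and then tightening to `quarantine` or `reject` is a common rollout path. The point is to start.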

What does this mean in practice?

Let us ponder for a moment the wondrous thing that is Transport Layer Security, or TLS. This is a cryptographic protocol that has — with the advent of Let’s Encrypt — radically changed and improved safety on the internet, in ways that a user will almost never need to notice. I consider it close to Arthur C. Clarke’s “technology indistinguishable from magic” in operation, because through various properly configured cryptographic incantations we render internet traffic meaningless to an adversary or other malicious entity. Most websites you visit in 2025 will protect your traffic in transit, something that was almost unthinkable in living memory.
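Part of what makes TLS feel like magic is how little code a client needs in order to get the safe defaults. The following is a minimal sketch using Python's standard `ssl` module. The function names are my own, and the TLS 1.2 floor is an assumption chosen for illustration, not a universal recommendation.

```python
import socket
import ssl
from typing import Optional


def strict_client_context() -> ssl.SSLContext:
    """Client-side TLS settings: verify certificates and hostnames."""
    # create_default_context() turns on certificate and hostname checks
    # and loads the system's trusted certificate authorities.
    context = ssl.create_default_context()
    # Assumption for this sketch: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def negotiated_tls_version(host: str, port: int = 443) -> Optional[str]:
    """Connect to host and report which TLS version was agreed on."""
    with socket.create_connection((host, port), timeout=10) as sock:
        context = strict_client_context()
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()


ctx = strict_client_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED)
print(ctx.check_hostname)
```

Calling `negotiated_tls_version` with a hostname you control would show what your own servers negotiate. It needs network access, so it is not called here.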

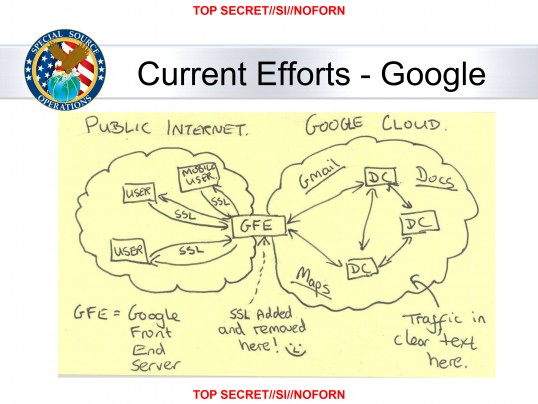

We could do the same for pandemic safety. Imagine widely deployed UVGI emitters on public transit, or a mask company putting real effort into normalising N95/FFP3 grade respirators as a piece of protective clothing that is also cool and fun to wear (a Ray-Ban of masks sort of thing), and so on. We know that these tools can be used to dramatically improve everyone’s health. But as the Swiss Cheese Model reminds us, this is not enough. Consider the following slide from the Snowden leaks:

Defence in depth means remembering that little smiley face in the middle there, next to “SSL added and removed here!”, before the clear-text cloud that follows. TLS is technology as magic, but what are we doing with the data that reaches our own servers? How secure is this very document? How secure is the device on which you’re reading it? Can we trust the certificate authorities? We have so much work to do and so little time.

Building a Better World

I continue to believe — much as there are more people who are still taking the pandemic seriously than we might otherwise believe — that there are more people out there who are interested in this way of doing things than it appears. There are people who are interested in security who do not have backgrounds in military intelligence, people who would like to build security tools that are usable by everyone. We can create secure user interfaces that are a joy to use, that keep users safe without scaring or confusing them.

Years ago at a conference I heard a story about how a company was bewildered at the frequency with which their users requested password resets, and became concerned that something untoward was going on. They investigated and discovered that their users were creating complex passwords, setting them, and then, when they needed to log in again, simply requesting a reset, setting a new complex password, and so on. When we treat our users as people who do actually deserve secure, accessible, performant, easy to use digital experiences, just as we must create safe, well ventilated, accessible, etc. physical spaces, we can start building that better world right here and now. Sure, the old world is dying and the new one struggles to be born, but that does not mean we have to be the monsters.

Rather than a world in which the industry pivots to using so-called “AI” tooling, which in turn is used to generate security bullshit (a technical term of art) and slightly better and more fear-inspiring phish-test methods, we can build a security industry better suited to the real material conditions of the world around us. We can make sure our security tools work on old phones, in unstable internet conditions, with irregular access to electricity. In the same way that respirators and air quality improvements ought to be ubiquitous, we can do the same for security enhancements both on our users’ end and our own systems, our own projects, and beyond.

This conference serves as evidence that this is possible. Feel free to ping me on the fediverse (@text@posting.institute) or via email (noah @ posting dot institute) if you would like to discuss this further.

noah is, for his sins, an information security professional of some years of experience. he is also part of the strange and wonderful autonomous collective that puts together the covid.tips and covidhelp.org (and covidhelp.org/uk) resources and is involved in several other kinds of activism.

Originally written for FluConf 2025, and I owe them many, many thanks for making me finally write all this stuff down.

The Swiss Cheese Model: How Infosec Must Learn From Pandemic Response © 2025 by noah is licensed under CC BY-NC-SA 4.0. To view a copy of this license, visit https://creativecommons.org/licenses/by-nc-sa/4.0